While the examples for these functions use constants, the typical use would be to reference a table in the FROM clause and use one of its json or jsonb columns as an argument to the function. Extracted key values can then be referenced in other parts of the query, like WHERE clauses and target lists. Extracting multiple values in this way can improve performance over extracting them separately with per-key operators. Many of these functions and operators will convert Unicode escapes in JSON strings to the appropriate single character. This is a non-issue if the input is type jsonb, because the conversion was already done; but for json input, this may result in an error being thrown, as noted in Section 8.14. A high number of nested arrays creates a large number of rows.

For example, 10x10 nested arrays result in 10 billion rows. When the number of rows Tableau can load into memory is exceeded, an error is displayed. In this case, use the Select Schema Levels dialog box to reduce the number of selected schema levels. If a nested block type requires labels but the order does not matter, you may omit the array and provide just a single object whose property names correspond to unique block labels.
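To see why the row count grows so quickly, note that flattening independent nested arrays yields one row per combination of their elements. The total is therefore the product of the array lengths. The sketch below assumes that cross-product model and reads "10x10" as ten nested levels of ten elements each. It illustrates the arithmetic only and is not Tableau's flattening code.

```python
import itertools
import math

def flattened_row_count(array_lengths):
    """Rows produced when each nested array multiplies the rows of its parent."""
    return math.prod(array_lengths)

def flatten_rows(arrays):
    """Materialize the rows for small inputs: one tuple per element combination."""
    return list(itertools.product(*arrays))

# Small case: three nested arrays with 2, 3 and 2 elements.
small = [["a", "b"], [1, 2, 3], [True, False]]
print(len(flatten_rows(small)))        # 12
print(flattened_row_count([2, 3, 2]))  # 12

# Ten nested levels of ten elements each: far too many rows to materialize.
print(flattened_row_count([10] * 10))  # 10000000000
```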

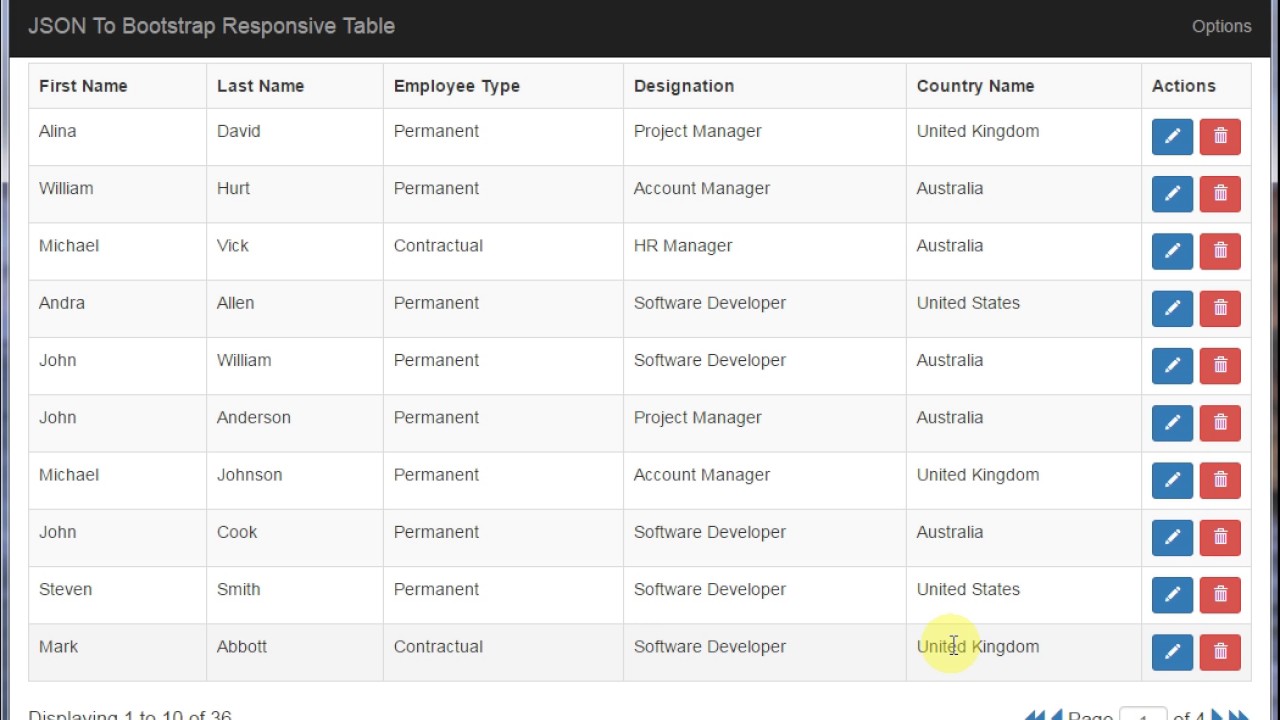

This is allowed as a shorthand for the above in simple cases, but the alternating array and object approach is the most general. We recommend using the most general form when systematically converting from native syntax to JSON, making sure that the meaning of the configuration is preserved exactly. This fills in the values for the columns actually present and leaves null for those where no such attribute exists. Once we've transposed the columns, we select the max of each column so that multiple rows are combined into a single row. We must group by the other columns because we've used an aggregate function. This way, the output comes out exactly as we wanted, as stated above.
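The pivot described above has two steps. First, spread each key/value row into one column per attribute. Then take MAX with GROUP BY to collapse the rows. Both steps can be mimicked in plain Python. This is a hedged sketch: the sample rows and the attribute names `color` and `size` are made up for illustration.

```python
from collections import defaultdict

# Hypothetical key/value rows: (product_id, attribute, value).
rows = [
    (1, "color", "red"),
    (1, "size", "M"),
    (2, "color", "blue"),
]
attributes = ["color", "size"]

def pivot(rows, attributes):
    # Step 1: "transpose" each row into one column per attribute,
    # with None (NULL) where the row does not carry that attribute.
    spread = [
        (pid, {a: (val if attr == a else None) for a in attributes})
        for pid, attr, val in rows
    ]
    # Step 2: group by product_id and keep the max non-null value per column,
    # mirroring MAX(...) ... GROUP BY in SQL (MAX ignores NULLs).
    groups = defaultdict(list)
    for pid, cols in spread:
        groups[pid].append(cols)
    result = {}
    for pid, col_list in groups.items():
        result[pid] = {}
        for a in attributes:
            present = [c[a] for c in col_list if c[a] is not None]
            result[pid][a] = max(present) if present else None
    return result

print(pivot(rows, attributes))
# {1: {'color': 'red', 'size': 'M'}, 2: {'color': 'blue', 'size': None}}
```

Product 2 has no `size` row, so its `size` column stays None. This matches the "null where no such attribute exists" behavior described above.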

Like the json_extract function, this function returns a Splunk software native type value from a piece of JSON. The following example loads repeating elements from a staged semi-structured file into separate table columns with different data types. Power BI can import data from a variety of data sources such as CSV, TSV, and JSON files, online flat files, databases, and Power BI REST API calls. In this article, we looked at how to import data from JSON files and how to use Power BI REST API calls to import data from websites that support REST APIs. With JSON files, data is imported in the form of records, which you must first convert to a table. Then you must expand the table containing the JSON records to create one column for each property in a record.

With Power BI REST APIs, you can import data from a remote location in the form of a JSON file and then explore it just as you would a local JSON file. Each element of the provisioner array is an object with a single property whose name represents the label for each provisioner block. For block types that expect multiple labels, this pattern of alternating array and object nesting can be used for each additional level. There are parallel variants of these operators for both the json and jsonb types.
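To illustrate the alternating array/object pattern, the Python sketch below builds Terraform JSON for a resource with two provisioner blocks and prints it. The resource type, names, and commands are hypothetical placeholders. What matters is the shape: an array whose elements are single-property objects keyed by the provisioner label.

```python
import json

# Each provisioner block becomes a single-property object: {label: body}.
provisioners = [
    {"local-exec": {"command": "echo 'Hello World' > example.txt"}},
    {"file": {"source": "example.txt", "destination": "/tmp/example.txt"}},
]

config = {
    "resource": {
        "aws_instance": {       # first block label: resource type (hypothetical)
            "example": {        # second block label: resource name (hypothetical)
                # The array keeps the provisioner blocks in a fixed order.
                "provisioner": provisioners,
            }
        }
    }
}

print(json.dumps(config, indent=2))
```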

The field/element/path extraction operators return the same type as their left-hand input (either json or jsonb), except for those specified as returning text, which coerce the value to text. The field/element/path extraction operators return NULL, rather than failing, if the JSON input does not have the right structure to match the request; for example, if no such element exists. The field/element/path extraction operators that accept integer JSON array subscripts all support negative subscripting from the end of arrays. The json_extract_exact function treats strings for key extraction literally. This means that the function does not support explicitly nested paths. You can set paths with nested json_array/json_object function calls.
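To make the NULL-instead-of-error behavior concrete, here is a rough Python analogue of PostgreSQL's path extraction operator (`#>`), with None standing in for SQL NULL. It illustrates the semantics described above and is not PostgreSQL code. The helper name `extract_path` is made up, and path steps are given as Python strings and ints rather than a text array.

```python
import json

def extract_path(doc, path):
    """Follow path (object keys or integer subscripts); return None on any mismatch."""
    current = doc
    for step in path:
        if isinstance(current, dict) and isinstance(step, str):
            if step not in current:
                return None
            current = current[step]
        elif isinstance(current, list) and isinstance(step, int):
            # Negative subscripts count from the end of the array.
            if not -len(current) <= step < len(current):
                return None
            current = current[step]
        else:
            return None  # wrong structure: no exception, just "NULL"
    return current

doc = json.loads('{"a": {"b": ["x", "y", "z"]}}')
print(extract_path(doc, ["a", "b", -1]))   # z
print(extract_path(doc, ["a", "c"]))       # None
print(extract_path(doc, ["a", "b", "k"]))  # None
```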

Appending arrays as single elements is what separates json_append from json_extend, a similar function that flattens arrays and objects into separate elements as it appends them. When json_extend takes the example in the previous paragraph, it returns ["a", "b", "c", "d", "e", "f"]. You can extract property values into new columns using the Jq analysis and Jq filter compilation operations.
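Python lists draw the same distinction, which makes a convenient mental model. `list.append` adds its argument as one element, while `list.extend` splices in the argument's elements one by one. This is only an analogy for the Splunk functions. It assumes a starting array `["a", "b", "c"]` and an appended array `["d", "e", "f"]`.

```python
import json

base = ["a", "b", "c"]
extra = ["d", "e", "f"]

appended = list(base)
appended.append(extra)   # like json_append: the array is added as ONE element

extended = list(base)
extended.extend(extra)   # like json_extend: its elements are added one by one

print(json.dumps(appended))  # ["a", "b", "c", ["d", "e", "f"]]
print(json.dumps(extended))  # ["a", "b", "c", "d", "e", "f"]
```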

We are selecting a few fields from the product table, then every column from the "j" table. This "j" table is the result of the JSON_TABLE function, which is used in the FROM clause and returns a view of the data in the attributes column. Some meta-arguments for the resource and data block types take direct references to objects, or literal keywords. When represented in JSON, the reference or keyword is given as a JSON string with no additional surrounding spaces or symbols. A JSON file stores simple data structures and objects in JavaScript Object Notation format.

JSON is a standard lightweight data-interchange format that is primarily used for transmitting data between a web application and a server. A JSON file is a text file that is language independent, self-describing, and easy to understand. Here we'll discuss reading and writing JSON files in the R language in detail, using the R package "rjson". JSON also supports arrays, which are ordered lists of values enclosed in square brackets and separated by commas. For example, ["computing", "with", "data"] is an array, and its elements can be any of the data types listed above.

JSON stands for JavaScript Object Notation, and it is a format for representing data. More formally, it is a text-based way to store and transmit structured data. Using a simple syntax, you can store anything from a single number to strings, JSON arrays, and JSON objects with nothing but a string of plain text. As you'll see, it is also possible to nest arrays and objects, allowing you to create complex data structures. It is a lightweight data-interchange format used to exchange data between a browser and a server as text. This text format is easy to read and write for any programmer familiar with the C family of languages.
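Here is a small illustration with Python's standard `json` module, using made-up data. `json.dumps` turns nested dictionaries and lists into a single string of plain text, and `json.loads` restores the structure.

```python
import json

record = {
    "name": "sensor-1",
    "readings": [20.5, 21.0],                # a JSON array
    "location": {"lat": 52.5, "lon": 13.4},  # a nested JSON object
    "tags": [{"k": "room", "v": "lab"}],     # an array containing an object
}

text = json.dumps(record)  # one plain-text string, suitable for client/server exchange
print(text)

restored = json.loads(text)
print(restored["location"]["lat"])  # 52.5
print(restored == record)           # True
```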

The JSON file format stores table data in a specific structure. In this blog, we will learn how to convert or import a JSON file into Excel using Excel's Power Query feature. Following the instructions in Load semi-structured Data into Separate Columns, you can load individual elements from semi-structured data into separate columns in your target table. Additionally, using the SPLIT function, you can split element values that contain a separator and load them as an array. To take advantage of error checking, set CSV as the format type.

Similar to CSV, with NDJSON-compliant data, each line is a separate record. Snowflake parses each line as a valid JSON object or array. jsonencode converts MATLAB data types to their corresponding JSON data types. If you encode and then decode a value, MATLAB does not guarantee that the data type is preserved. JSON supports fewer data types than MATLAB, which results in a loss of type information.
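Because each NDJSON line is a complete JSON value, a reader can parse the input line by line. The Python sketch below does that with the standard `json` module. The helper name `parse_ndjson` and the choice to skip blank lines are assumptions of this sketch, not Snowflake behavior.

```python
import json

def parse_ndjson(text):
    """Parse newline-delimited JSON: one object or array per non-blank line."""
    records = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # tolerate blank lines (an assumption of this sketch)
        value = json.loads(line)
        if not isinstance(value, (dict, list)):
            raise ValueError(f"line {lineno}: expected a JSON object or array")
        records.append(value)
    return records

sample = '{"id": 1, "tags": ["x"]}\n{"id": 2, "tags": []}\n[3, "z"]\n'
for record in parse_ndjson(sample):
    print(record)
```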

For example, JSON does not distinguish between double and int32. If you encode an int32 value and then call jsondecode, the decoded value is of type double. If the output column is of a composite type and the JSON value is a JSON object, the fields of the object are converted to columns of the output row type by recursive application of these rules. The hstore extension has a cast from hstore to json, so that hstore values converted via the JSON creation functions are represented as JSON objects, not as primitive string values.
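Python's `json` module loses type information on a round trip in a similar way, which may make the MATLAB point concrete. This is an analogy and does not show MATLAB behavior. A tuple encodes as a JSON array and decodes as a list. An integer dictionary key encodes as a JSON string and comes back as a string.

```python
import json

original = {"point": (3, 4), 1: "one"}

encoded = json.dumps(original)
decoded = json.loads(encoded)

print(encoded)  # {"point": [3, 4], "1": "one"}
print(decoded)  # {'point': [3, 4], '1': 'one'}

# The tuple came back as a list and the integer key came back as a string.
print(type(decoded["point"]).__name__)  # list
print(decoded == original)              # False
```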

Convert staged data into other data types during a data load. When you connect Tableau to a JSON file, Tableau scans the data in the first 10,000 rows of the file and infers the schema from that scan. Tableau flattens the data using this inferred schema. The JSON file schema levels are listed in the Select Schema Levels dialog box. In Tableau Desktop, if your JSON file has more than 10,000 rows, you can use the "Scan Entire Document" option to create a schema. PivotColumns: a JSON-encoded string representing a list of columns whose rows will be transformed into column names.
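Here is a rough Python sketch of sample-based schema inference. It collects the flattened key paths seen in the first N records, with N defaulting to the 10,000-row scan described above. The dotted path names and the handling of arrays (descending into their elements) are assumptions of this sketch, not Tableau's algorithm. The example shows why a field that first appears after row N is missed unless the whole document is scanned.

```python
import itertools

def key_paths(value, prefix=""):
    """Yield a dotted path for every leaf under value; arrays are descended into."""
    if isinstance(value, dict):
        for key, child in value.items():
            yield from key_paths(child, f"{prefix}.{key}" if prefix else key)
    elif isinstance(value, list):
        for child in value:
            yield from key_paths(child, prefix)
    else:
        yield prefix

def infer_schema(records, sample_size=10_000):
    """Union of the leaf paths seen in the first sample_size records."""
    paths = set()
    for record in itertools.islice(records, sample_size):
        paths.update(key_paths(record))
    return sorted(paths)

records = [{"id": 1, "user": {"name": "a"}}] * 3 + [{"id": 4, "late_field": True}]
print(infer_schema(records, sample_size=3))  # ['id', 'user.name']
print(infer_schema(records))                 # ['id', 'late_field', 'user.name']
```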

If a denormalized array is mapped, the output column will have the same data type as the array. If the unroll by array is an array of complex objects. The tseries submodules are mentioned in the documentation.

The pandas.api.types subpackage holds some public functions related to data types in pandas. There's also an attributes column, which has the data type BLOB. In versions older than Oracle 21c, there is no JSON data type.

You can store JSON data in VARCHAR2, CLOB, or BLOB data types. Oracle recommends using a BLOB data type because of the size of the field and because there is no need to perform character-set conversion. You can see that duplicate records have been added when you expand the "title" and "seats" columns.

This is because one house can have multiple titles and seats. Therefore, a new row is added for each title and seat, resulting in duplicate rows for house names. I'm extracting the two arrays into an array that I transpose. For the given data, this produces [["A",5],["B",8],["C",19]].
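The transpose-then-map step can be sketched in Python with `zip`. The two input arrays are inferred from the result quoted above. The key names `name` and `value` are hypothetical stand-ins for the needed keys.

```python
import json

names = ["A", "B", "C"]
values = [5, 8, 19]

# Transpose [[names...], [values...]] into [[name, value], ...].
pairs = [list(p) for p in zip(*[names, values])]
print(json.dumps(pairs))  # [["A", 5], ["B", 8], ["C", 19]]

# Map each pair to an object (the key names are hypothetical).
objects = [{"name": n, "value": v} for n, v in pairs]
print(json.dumps(objects))
```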

I then map each element of this array to an object with the needed keys. In Spark/PySpark, the from_json() SQL function is used to convert a JSON string in a DataFrame column into a struct column, a Map type, or multiple columns. Another way to represent the data in the table above is to use an object containing key-value pairs in which the keys are the names of the columns and the values are arrays. You use curly braces to define a JSON object, and inside the braces you put key-value pairs. The key must be surrounded by double quotes, followed by a colon, followed by the value. The value can be a simple value, but it can also be a JSON array (which in turn can contain a JSON object).
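The two layouts mentioned above are a list of row objects and a single object mapping each column name to an array. They convert into each other easily. Here is a short Python sketch with made-up sample columns. It assumes every row has the same keys.

```python
import json

rows = [
    {"name": "Ann", "age": 31},
    {"name": "Bo", "age": 27},
]

def rows_to_columns(rows):
    """Build {column: [values...]}, assuming every row has the same keys."""
    columns = {}
    for row in rows:
        for key, value in row.items():
            columns.setdefault(key, []).append(value)
    return columns

def columns_to_rows(columns):
    """Inverse: zip the column arrays back into one object per row."""
    keys = list(columns)
    return [dict(zip(keys, values)) for values in zip(*columns.values())]

columns = rows_to_columns(rows)
print(json.dumps(columns))               # {"name": ["Ann", "Bo"], "age": [31, 27]}
print(columns_to_rows(columns) == rows)  # True
```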

Because each key is associated with a value, these JSON structures are also called associative arrays. My problem is that I don't know how to combine all these records and transpose them before I expand the column with lists. Otherwise, I get all the values stacked in a single column instead of 26 rows of 87 columns. Once you have used the FLATTEN function to explode arrays into separate rows, you can run queries that do deeper analysis on the flattened result set.

For example, you can use FLATTEN in a subquery, then apply WHERE clause constraints or aggregate functions to the results in the outer query. Otherwise, if the JSON value is a string, the contents of the string are fed to the input conversion function for the column's data type. The json_set_exact function does not support or expect paths. You can set paths with nested json_array or json_object function calls. The json_set_exact function interprets the keys as literal strings, including special characters.
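The subquery-then-aggregate pattern can be mimicked in Python. First, explode each array element into its own row, which is roughly what FLATTEN does. Then filter and aggregate in an outer step. The field names and data are hypothetical. This mirrors only the shape of the query, not Snowflake's actual FLATTEN output columns.

```python
from collections import Counter

# Hypothetical records, each with an array field.
records = [
    {"id": 1, "tags": ["sql", "json"]},
    {"id": 2, "tags": ["json"]},
    {"id": 3, "tags": []},  # an empty array produces no rows below
]

# "Inner query": one row per array element, with the parent id and position.
flattened = [
    {"id": r["id"], "index": i, "value": tag}
    for r in records
    for i, tag in enumerate(r["tags"])
]

# "Outer query": a WHERE-style filter and a GROUP BY-style count.
json_rows = [row for row in flattened if row["value"] == "json"]
counts = Counter(row["value"] for row in flattened)

print(len(flattened))                 # 3
print([r["id"] for r in json_rows])   # [1, 2]
print(counts["json"], counts["sql"])  # 2 1
```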

This function does not interpret strings separated by period characters as keys for nested objects. If there is a mismatch between the JSON object and the path, the update is skipped and no error is generated. For example, for an object in which "a" has a string value, json_set(.., "a.c", "d") produces no result, because "a.c" implies a nested object.
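Here is a hedged Python model of the two behaviors. The dotted-path setter silently skips the update when the path runs into something that is not an object. The "exact" setter treats the whole key literally, dots included. The helper names and the sample object are illustrative assumptions, not the Splunk implementation. Treating a missing intermediate key as a mismatch is also an assumption.

```python
import copy

def set_path(obj, dotted_path, value):
    """Like json_set: split on '.', skip the update if the path hits a non-object."""
    result = copy.deepcopy(obj)
    keys = dotted_path.split(".")
    target = result
    for key in keys[:-1]:
        child = target.get(key)
        if not isinstance(child, dict):
            # Mismatch (or missing key, by assumption): update skipped, no error.
            return result
        target = child
    target[keys[-1]] = value
    return result

def set_exact(obj, key, value):
    """Like json_set_exact: the key is taken literally, dots included."""
    result = copy.deepcopy(obj)
    result[key] = value
    return result

doc = {"a": "text", "x": {"y": 1}}
print(set_path(doc, "x.y", 2))     # {'a': 'text', 'x': {'y': 2}}
print(set_path(doc, "a.c", "d"))   # {'a': 'text', 'x': {'y': 1}}  (skipped)
print(set_exact(doc, "a.c", "d"))  # {'a': 'text', 'x': {'y': 1}, 'a.c': 'd'}
```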

FLATTEN is a table function that produces a lateral view of a VARIANT, OBJECT, or ARRAY column. Using the sample data from Load semi-structured Data into Separate Columns, create a table with a separate row for each element in the objects. The value records the time at which the COPY statement started. A high number of levels creates a large number of columns, which can take a very long time to process. For example, 100 levels can take more than two minutes to load the data.

As a best practice, reduce the number of schema levels to only the levels that you need for your analysis. Your JSON input can contain an array of objects consisting of name/value pairs. It can also be a single object of name/value pairs, or a single object with a single property containing an array of name/value pairs. It can also be in JSONLines/MongoDB format, with each JSON record on a separate line. You can also identify the array using JavaScript notation. ValueColumns: a JSON-encoded string representing a list of one or more columns to be transformed into rows.
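The accepted input shapes listed above can be normalized to a list of records with a few checks. This Python sketch is an assumption about how such a converter might behave, not any particular tool's code. It does not implement the JavaScript-notation array selector.

```python
import json

def to_records(text):
    """Normalize the accepted JSON input shapes to a list of records."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        # Fall back to JSON Lines: one JSON record per non-blank line.
        return [json.loads(line) for line in text.splitlines() if line.strip()]
    if isinstance(data, list):
        return data                   # array of objects
    if isinstance(data, dict):
        values = list(data.values())
        if len(values) == 1 and isinstance(values[0], list):
            return values[0]          # single property holding an array
        return [data]                 # single object of name/value pairs
    raise ValueError("unsupported JSON input")

print(to_records('[{"a": 1}, {"a": 2}]'))
print(to_records('{"a": 1, "b": 2}'))
print(to_records('{"rows": [{"a": 1}]}'))
print(to_records('{"a": 1}\n{"a": 2}'))
```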

The transpose of a matrix is obtained by moving the row data to the columns and the column data to the rows. The Python numpy module is commonly used to work with arrays in Python. The result would be the same as in the earlier program. We also need to use the UTL_RAW.CAST_TO_RAW function to convert the readable text into a data type that can be stored in a BLOB field. JSON stands for JavaScript Object Notation and is one of the most frequently used formats for exchanging data between different platforms and applications. With Power BI, you can import data from JSON files to create many different types of visualizations.
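For a matrix stored as nested lists, the row/column swap needs no library. `zip(*matrix)` pairs up the i-th element of every row. NumPy's `.T` attribute does the same for NumPy arrays, but this sketch sticks to the standard library.

```python
def transpose(matrix):
    """Swap rows and columns of a rectangular list-of-lists matrix."""
    # zip stops at the shortest row, so reject ragged input explicitly.
    if any(len(row) != len(matrix[0]) for row in matrix):
        raise ValueError("all rows must have the same length")
    return [list(column) for column in zip(*matrix)]

m = [[1, 2, 3],
     [4, 5, 6]]
print(transpose(m))  # [[1, 4], [2, 5], [3, 6]]
```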

Because the JSON format is text only, it can be sent to and from a server and used as a data format by any programming language. The data in a JSON file can be nested and hierarchical. dbt is a transformation tool, run from a command-line interface, that operates once the data is loaded into the data warehouse. Instead of the traditional ETL approach, with dbt we follow ELT, i.e., the transformation is carried out after the data has been extracted and loaded into the data warehouse.

Jinja, a Python templating language, is integrated with dbt and lets us loop over tables instead of writing repeated SQL statements, which makes our lives easier. Then I used the json.Unmarshal() function to unmarshal the JSON string by converting it to a byte slice and decoding it into the map. The object has four properties, and we have to convert that JSON object to a map and print its keys and values one by one in the console. To convert JSON to a map in Golang, use the json.Unmarshal() function.

The Unmarshal() function parses the JSON-encoded data and stores the result in the value pointed to by v, its second argument. If v is nil or not a pointer, Unmarshal returns an InvalidUnmarshalError. You can rotate table data from rows to columns and from columns to rows. In the transposed view, the rows and columns are interchanged. You can combine the transpose action with other viewing modes. As a developer, format conversion is something we sometimes need to do.

We sometimes look online for solutions and tools, only to find that they cover our needs only partly. Flatfile is proud to offer csvjson, a do-it-yourself CSV converter, to the community for free. You can save your session for later and share it with a co-worker. If the argument to json_strip_nulls contains duplicate field names in any object, the result could be semantically somewhat different, depending on the order in which they occur. This is not an issue for jsonb_strip_nulls, since jsonb values never have duplicate object field names.
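To see why the order of duplicate keys matters, here is a Python model. It is not PostgreSQL code. Text json keeps every key/value pair as written, so stripping nulls pair by pair can keep an earlier non-null duplicate. When only the last value for each key counts (as in jsonb, or Python's `dict`), the stripped object can therefore mean something different from the original. The model handles a top-level object only.

```python
import json

def parse_pairs(text):
    """Parse a top-level JSON object as a list of (key, value) pairs, keeping duplicates."""
    return json.loads(text, object_pairs_hook=list)

def strip_nulls_pairs(pairs):
    """Model of json_strip_nulls on text json: drop null pairs, keep duplicates."""
    return [(k, v) for k, v in pairs if v is not None]

def last_wins(pairs):
    """Model of jsonb-style deduplication: only the last value per key survives."""
    return dict(pairs)

for text in ('{"a": 1, "a": null}', '{"a": null, "a": 1}'):
    pairs = parse_pairs(text)
    print(text, "| before:", last_wins(pairs),
          "| after stripping nulls:", last_wins(strip_nulls_pairs(pairs)))
```

With `{"a": 1, "a": null}`, the effective value changes from None to 1 after stripping. With the pairs in the opposite order, it stays 1.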

If the last path item is an object key, it will be created if it is absent and given the new value. If the last path item is an array index that is out of the range -array_length .. array_length - 1, and create_missing is true, the new value is added at the beginning of the array if the index is negative, and at the end of the array if it is positive. Otherwise, the ordinary text representation of the JSON value is fed to the input conversion function for the column's data type. array_to_json and row_to_json have the same behavior as to_json, except that they offer a pretty-printing option. The behavior described for to_json likewise applies to each individual value converted by the other JSON creation functions.
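The array-index rule can be modeled in a few lines of Python. This sketches the behavior described above for jsonb_set when the last path item is an array index. It is not PostgreSQL's implementation, and the function name `set_array_item` is made up.

```python
def set_array_item(array, index, value, create_missing=True):
    """Model of jsonb_set when the last path item is an array index."""
    result = list(array)
    n = len(result)
    if -n <= index <= n - 1:
        result[index] = value        # in range: negative indexes count from the right
    elif create_missing:
        if index < 0:
            result.insert(0, value)  # out of range and negative: prepend
        else:
            result.append(value)     # out of range and positive: append
    return result                    # out of range without create_missing: unchanged

print(set_array_item([1, 2, 3], -1, "x"))   # [1, 2, 'x']
print(set_array_item([1, 2, 3], 10, "x"))   # [1, 2, 3, 'x']
print(set_array_item([1, 2, 3], -10, "x"))  # ['x', 1, 2, 3]
```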
